AI fatigue may strengthen trust in news media, but it won’t stop harm against minorities

(Canva/VALENTIN FLAURAUD/AFP via Getty Images)

The majority of news leaders think the huge expansion in misinformation and AI-generated content will actually increase audience support for human-made journalism. But that assumes people can always tell the difference and knowingly choose what types of content they engage with. And that is the most dangerous part: a lot of people can’t.

On Monday (12 January), the Reuters Institute at Oxford University dropped its latest ‘Journalism, media, and technology trends and predictions’ report, based on a survey of 280 digital leaders from 51 countries and territories around the world – although there is always a very heavy focus on Europe in its pool of respondents.

A comprehensive rundown of the key changes affecting news media in the year ahead, it is insightful reading for anyone working in, or interested in, the business of making news. The Fix labelled it “the ultimate gospel” and, honestly, that’s a pretty on-the-nose description, because on the day it is released it is all I ever see journos on my timelines talking about.

The 2026 report is, unsurprisingly, heavily focused on the continued rise of artificial intelligence (AI) across the media sector, with in-depth discussion of the impact of Google’s AI Overviews on click-through rates, potential new revenue streams from AI chatbot referrals and all the ways AI is being used in newsrooms today, such as in coding, distribution, newsgathering and content creation.

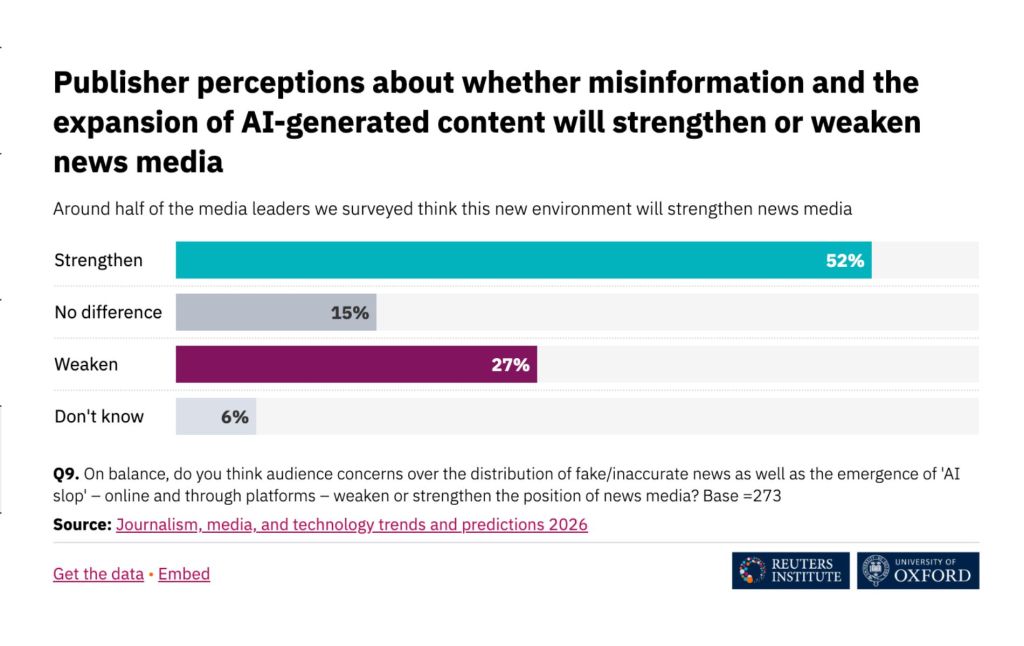

One interesting point the report raises, though, is news leaders’ belief that the sheer barrage of misinformation and “AI slop” people are seeing every which way on the internet will actually strengthen support for the news media – the human-made kind, that is. More than half (53 per cent) of those surveyed feel this way, while just over a quarter say it will weaken support for the news media, 15 per cent believe it will make no difference and six per cent are not sure.

Indeed, Edward Roussel, head of digital at The Times and Sunday Times, was quoted in the report as saying: “As AI sweeps the world, there will be growing demand for human-checked, high-quality journalism.”

However, Nic Newman, the longtime author of the reports, whose 2026 edition will be his last, said such views “may be wishful thinking”.

“If audiences are unsure what is true or false on the internet, this does not necessarily mean they will turn back to news media,” Newman said.

“Surveys show that a significant proportion in most countries deeply distrust the news media overall, with others happy to rely on user comments or even AI chatbots to check the facts.

“At the same time, it is in the interest of platforms to ensure that misinformation and ‘AI slop’ does not degrade the experience (so called ‘enshittification’) and undermine their business models.”

As the glass-half-empty person I am, and as a journalist who spends much of my job debunking fake news about the LGBTQ+ community, I also agree it’s pretty wishful thinking to believe people will get sick of AI-generated content and choose to engage with, trust and support only news content that is sourced and created by human beings.

As the viral AI-generated content of rabbits bouncing on a trampoline, the Pope in a puffer jacket or a Pride flag, and Donald Trump being tackled and arrested shows, a lot of people simply cannot tell the difference between what is real and what is fake, or at the very least don’t care.

This poses a problem not only for the media sector, public trust in which is at an all-time low, but also for the minority groups, such as the LGBTQ+ community and refugees, who are often used as cannon fodder for such fake news.

Now, not all fake content is inherently ‘bad’, and I do say that with a grain of salt.

Take the cutesy AI video of rabbits bouncing on a trampoline, which has amassed tens of millions of views across various video-sharing platforms: on the scale of harm, setting aside the environmental impact of AI, it’s pretty low.

A much older example of harmless fake news is the BBC’s spaghetti-tree hoax from 1957, in which the broadcaster ran a segment on April Fools’ Day about a family in southern Switzerland ‘harvesting’ cooked spaghetti from a “spaghetti tree”. Obviously, spaghetti does not grow on trees; it is made from flour and water. But at the time the long, thin pasta was something of a mystery food in the UK, so plenty of easily convinced viewers contacted the BBC to ask for advice on growing their own spaghetti trees. It was a proper prank, a classic ‘ha ha, gotcha!’, that no news organisation has been able to top since, though they all try, every year.

Sometimes fake news is fake for satirical reasons, sure, but sometimes it is fake – purposefully so – for actively harmful and dangerous reasons, like disrupting democracy.

As I found when investigating last year, there was a huge increase on Facebook in anti-LGBTQ+ disinformation falsely framed as ‘legitimate’ news content and published by pages purporting to be trustworthy news sources. This content included fake quotes attributed to professional athletes on hot-button issues like trans inclusion in sport, and out public figures like Sam Smith being falsely quoted as announcing frankly bizarre updates to their gender, pronouns and relationship status.

The goal of this content is not to inform but to divide communities over contentious issues like trans rights and, in doing so, make people question their own government’s policies and legislation, a tactic known in national security circles as Foreign Information Manipulation and Interference (FIMI).

The EU defines FIMI as a “pattern of behaviour that threatens or has the potential to negatively impact values, procedures and political processes” wherein such activity “often seeks to stoke polarisation and divisions inside and outside the EU while also aiming to undermine the EU’s global standing and ability to pursue its policy objectives and interests”.

A 2023 EU report entitled ‘FIMI targeting LGBTIQ+ people: Well-informed analysis to protect human rights and diversity’, the first to focus specifically on anti-LGBTQ+ FIMI, found 31 FIMI cases related to the queer community between June 2022 and July 2023, with half of them attributed to Russia.

The report found that anti-LGBTQ+ FIMI is politically motivated and seeks to harden public opinion against LGBTQ+ rights, as well as to sow division in communities and undermine democracy.

“The reach of FIMI cases targeting LGBTIQ+ goes beyond this community,” the report reads. “According to the evidence collected during the investigation, FIMI actors aimed to provoke public outrage not only against named LGBTIQ+ individuals, communities, or organisations – but also against government policies, the concept of democracy as such, and local or geopolitical events.

“While undermining LGBTIQ+ people was a common theme in many of the FIMI cases identified, the overarching narrative in many of them was that the West is in decline.

“By leveraging the narrative of decline, FIMI threat actors attempt to drive a wedge between traditional values and democracies.

“They claim that children need to be protected from LGBTIQ+ people, that LGBTIQ+ people get preferential treatment in sports and other fields – to the detriment of others – and that Western liberal organisations or political groups are demonstrably weak because they surrender to ‘LGBTIQ+ propaganda’.”

It might not surprise you, but all of the comments underneath the Facebook posts pushing this blatantly obvious anti-LGBTQ+ disinformation were from people who genuinely believed it was true and were quick to decry so-called wokery, like trans people having rights.

You only need to look at events on X, formerly known as Twitter, over the last few days, where users have been using the platform’s AI chatbot Grok to digitally undress images of women and children, to see the real-life harms of AI and fake content playing out in real time.

The abuse, the anonymity of the abuser, the impact on the victim: it’s all clear to see.

Coming back to the point of this piece, the idea that AI fatigue may lead more people to support human-made news is hopeful at best and naive at worst.

People may tire of AI content, as many already are, may change their settings on social media so they no longer see it, may call out friends and family when they repost or share fake news talking points – but those are just some people, not all.

There are many people out there who cannot choose to disconnect from AI or the fake news machine because they cannot discern what exactly is fake. If you want some scary figures, Ofcom’s 2025 Adults’ Media Use and Attitudes Report found that just a third (34 per cent) of adults who were aware of AI said they felt confident recognising AI-generated content online, meaning the other 66 per cent did not report feeling confident.

As journalists and news leaders, those are the people we should be focusing our attention on: not the ones who already have the digital know-how and will eventually throw their support behind human-made media, but the ones with low media literacy who will share a story about Sam Smith identifying as a cabbage from a Facebook page called ‘News You Should Care About If You Love Briton!!!!’ and think it’s real.

That is the real danger.